Artificial intelligence can be biased in many ways: in search results, image recognition, predictive policing, and criminal sentencing software. This article in the Guardian provides a useful summary and describes the main problem: humans are biased, so software models trained on human-produced data will also be biased. Lately, I’ve been interested in how bias enters the language that we humans use, and how Voice User Interfaces (VUIs) and digital assistants will replicate that bias in the language that we “teach” them.

An example: if you ask Alexa to tell you about Eric Garner, she will respond, puzzlingly, “Eric Garner the person.” Ask her to tell you about Eric Garner the person, and she’ll say, “Eric Garner was a male celebrity who was born on September 15th, 1970, in New York City, New York, USA.” Ask 100 people on the street, and none of them will begin their answer by saying that Eric Garner was a “male celebrity.” Ask Alexa how Eric Garner died, and she’ll say, “Eric Garner died on July 17th, 2014, at the age of 43 due to hypertension, obesity, asthma, chokehold, and compressive asphyxia.” Is this how you would answer? Is this answer true? Finally, ask Alexa who killed Eric Garner, and she’ll say, “Here is something I found on Wikipedia: On July 17, 2014, Eric Garner died in Staten Island, New York City, after a New York City Police Department (NYPD) officer put him in a headlock for about 15 to 19 seconds while arresting him.” Alexa is somehow freelancing on the first two questions, but for the third, she goes to a specific source, the Wikipedia entry on Eric Garner. Is this more accurate?

Each of these three responses is problematic: for the friends and family of Eric Garner, certainly, and for Black Lives Matter and the equality movement generally, but also for the future of natural language generation, for machine learning, and for the quest for a general artificial intelligence.

“Eric Garner was a male celebrity…”

Where does this come from? Most Wikipedia entries about notable people begin, “So-and-so is/was a such-and-such,” and if you ask Alexa to tell you about them, she’ll read off the first line or two of their Wikipedia page. “George Herman ‘Babe’ Ruth Jr. was an American professional baseball player…” “William Felton Russell is an American retired professional basketball player.” “August Wilson was an American playwright whose work included a series of ten plays, The Pittsburgh Cycle...” But Garner’s Wikipedia entry begins, “On July 17, 2014, Eric Garner died in Staten Island, New York City, after a New York City Police Department (NYPD) officer put him in a headlock…” More precisely, the Wikipedia page for Eric Garner redirects to a page titled “Death of Eric Garner.” As a matter of classification, Eric Garner doesn’t have a Wikipedia page; only his death does.

Google returns zero results for the exact phrase “Eric Garner was a male celebrity.” So Alexa appears to be piecing the information together in some way, using online data, statistics, and the co-occurrence of keywords. She might run a date-of-death check to learn that the subject of the query is deceased, and therefore use “was” instead of “is.” Plenty of sources online record the fact of Garner’s death, and Amazon has probably settled on one authoritative source for such information. “Male” is likewise relatively straightforward. But where does “celebrity” come from?
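
To make that speculation concrete, here is a minimal sketch of how a templated biography sentence could be assembled from structured fields. The function `describe` and its fields (`gender`, `occupation`, `birth_date`, `birth_place`, `death_date`) are hypothetical illustrations, not Amazon's implementation. The point is that the tense comes from a mechanical date-of-death check, while the noun is whatever label the upstream data happens to supply.

```python
from datetime import date
from typing import Optional


def ordinal(n: int) -> str:
    """Return a day number with its English ordinal suffix, e.g. '15th'."""
    if 10 <= n % 100 <= 20:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


def describe(name: str, gender: str, occupation: str,
             birth_date: date, birth_place: str,
             death_date: Optional[date] = None) -> str:
    """Hypothetical template filler for a one-sentence biography."""
    # Tense is chosen mechanically: any recorded death date yields "was".
    verb = "was" if death_date is not None else "is"
    born = f"{birth_date.strftime('%B')} {ordinal(birth_date.day)}, {birth_date.year}"
    # The template trusts whatever occupation label it is handed.
    return (f"{name} {verb} a {gender} {occupation} who was born on "
            f"{born}, in {birth_place}.")
```

Called as `describe("Eric Garner", "male", "celebrity", date(1970, 9, 15), "New York City, New York, USA", death_date=date(2014, 7, 17))`, this returns exactly the sentence Alexa produced, which shows how a template can be grammatically flawless while faithfully repeating a bad label.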

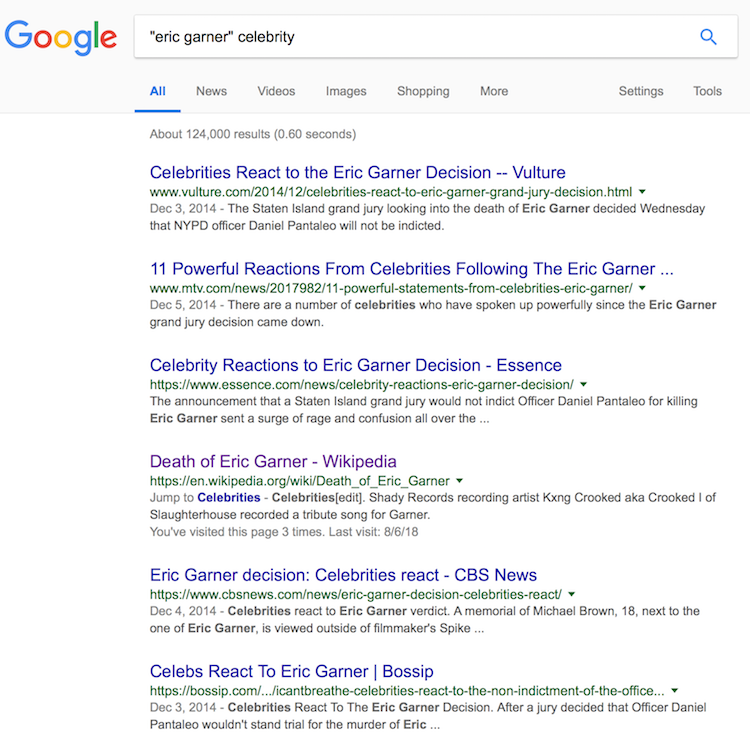

It is increasingly impossible to know exactly how a machine learning algorithm arrives at its results. But a Google search for “eric garner” and “celebrity” reveals three possible directions to explore. First, many celebrities commented on the circumstances of Garner’s death and on subsequent developments in the case, such as the grand jury’s decision not to indict Daniel Pantaleo for his death (see image below). These stories contain the word “celebrity,” and are about celebrities associating themselves with Garner. Second, some of these stories are also classified as celebrity stories by the information architecture of the sites where they appear. BET’s story “Tweet Sheet: Celebs React to No Indictment in Eric Garner Chokehold Case,” for example, is organized under https://www.bet.com/celebrities/... Third, many celebrity artists did something with Garner’s case. The Wikipedia entry for Garner’s death contains a section header on Celebrities, and notes such artistic handlings as Kxng Crooked’s (aka Crooked I) tribute song, and Spike Lee’s splicing of footage of Garner’s death into scenes from Do the Right Thing. So “eric garner” doesn’t just appear near the word “celebrity” with statistically unusual frequency, it co-occurs with many different meanings and uses of “celebrity” as well. Perhaps the analyses performed by Alexa’s algorithms reached some threshold that led to the determination that Garner was a celebrity too.

Results for Google search on 'eric garner' and 'celebrity' on 8/8/2018.

Results for Google search on 'eric garner' and 'celebrity' on 8/8/2018.
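
The co-occurrence reasoning above can be illustrated with pointwise mutual information (PMI), a standard measure of whether two terms appear together more often than chance would predict. This is a toy sketch under loud assumptions: the four "documents" are invented placeholders, the `THRESHOLD` value is arbitrary, and there is no evidence that Alexa uses PMI or any rule like it.

```python
import math
from typing import List, Set


def pmi(docs: List[Set[str]], a: str, b: str) -> float:
    """Pointwise mutual information of terms a and b over a set of documents.

    PMI = log2(P(a, b) / (P(a) * P(b))), where each probability is the
    fraction of documents containing the term (or both terms).
    """
    n = len(docs)
    p_a = sum(a in d for d in docs) / n
    p_b = sum(b in d for d in docs) / n
    p_ab = sum(a in d and b in d for d in docs) / n
    if p_ab == 0:
        return float("-inf")  # the terms never co-occur
    return math.log2(p_ab / (p_a * p_b))


# Invented toy corpus: each document is reduced to its set of terms.
docs = [
    {"eric garner", "celebrity", "tweet"},
    {"eric garner", "celebrity", "song"},
    {"baseball", "player"},
    {"playwright", "celebrity"},
]

THRESHOLD = 0.0  # hypothetical cutoff for a "statistically unusual" association
score = pmi(docs, "eric garner", "celebrity")
print(f"PMI = {score:.3f}, exceeds threshold: {score > THRESHOLD}")
```

On this invented corpus the score is log2(4/3), about 0.415, so the pair clears the cutoff. Note that the count collapses every sense of “celebrity” (celebrities reacting, a site's celebrities section, a tribute song) into one number, which is precisely the ambiguity described above.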

“Eric Garner died of …

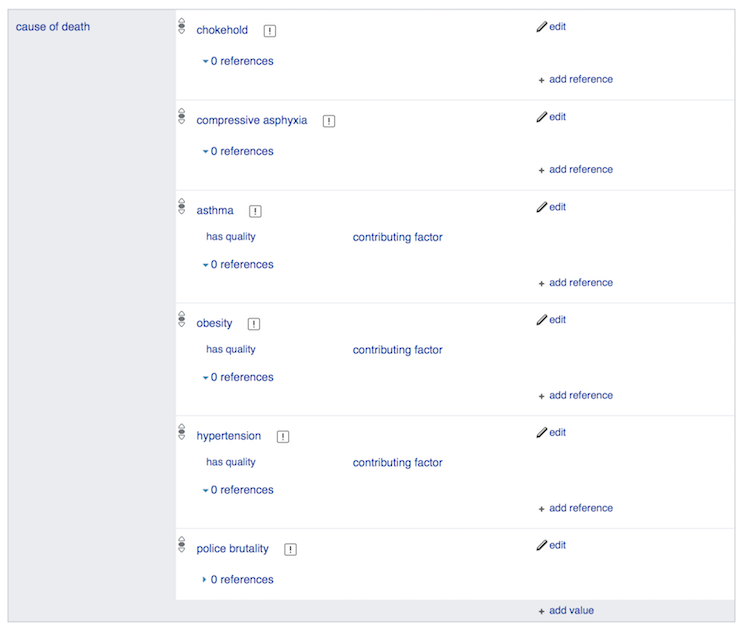

… hypertension, obesity, asthma, chokehold, and compressive asphyxia.” Where did this list come from? My best guess is this page from Wikidata. This is the only page I can find on the internet that contains all five “causes” named in Alexa’s response. But what’s the source of this information? Anyone can create a Wikidata account, and anyone can edit a Wikidata page. This list includes most of the physical causes commonly referred to in stories about Garner’s death (it excludes “heart failure,” however), but to provide this as though it were a comprehensive list of factors, without any reference whatsoever to the actions of the police officer who administered the chokehold or caused the compressive asphyxia, is neglectful at a minimum. It constructs a neutral narrative of the events of Garner’s death that removes the racial, economic, and political contexts that were also, at the least, contributing factors. Of course, it is possible to edit the Wikidata page—I added “police brutality” as a cause of death, for instance (see below), but there is no knowing when or if Alexa’s algorithm will integrate the updated information into her answer to the question, How did Eric Garner die?

Wikidata page on Eric Garner with my addition of "police brutality" as a cause of death.

Is this how we want the robots of the future to construct their understanding of the world and of world events, and then convey that understanding to their human companions? Screen-enabled assistants, such as Siri on your phone, will usually default to a list of search results for such queries, letting the user immediately see the context, and the controversy, surrounding such topics. But to the extent that a VUI like Alexa is a sign of social robots to come, her simplistic voice-only responses to difficult and politically dense factual questions will necessarily omit crucial elements of our social history.

Eric Garner died “after a New York City Police Department (NYPD) officer put him in a headlock for about 15 to 19 seconds while arresting him.”

This answer, at least, uses the active voice to describe an action by a police officer that resulted in Garner’s death. And unlike the previous responses, it identifies the source of the information (Wikipedia) that Alexa is relating. But there are at least two problems with this response. First, the reference to Wikipedia lends the information a degree of authority. Wikipedia has all the familiar shortcomings of a crowdsourced encyclopedia, but, for better or worse, it has acquired a cultural standing as a first source of information on any topic, and many people use its articles as a starting point for learning more. Second, and made all the more damaging by that authority, the sentence uses “headlock” instead of “chokehold.” To a large extent, the meaning of what happened to Garner comes down to whether you believe Pantaleo used a “chokehold,” a restraint banned by the New York Police Department since the 1990s, or a “headlock,” which can mean a form of vascular compression (or “sleeper hold”) on the sides of the neck and remains an approved means of subduing a suspect who is resisting arrest. In the politically charged coverage of the Garner homicide, to call Pantaleo’s action a “headlock” instead of a “chokehold” is to deny that the police did anything wrong in subduing Garner. Plenty of people believe that, but is this the one takeaway that Alexa (or Wikipedia, for that matter) should leave people with when they ask who killed Eric Garner? If the answer begins with “headlock” instead of “chokehold,” then no one killed him; he died of obesity, hypertension, asthma, and/or heart failure.

Most of the time, when Alexa answers your question, she is reading from a script pre-written by a human. But the holy grail of natural language generation is for artificial intelligences to “understand” language, information, and context well enough to write their own scripts. As these efforts begin to appear in the digital assistants in our homes, we’ll be increasingly exposed to the biases of the human language from which they build their language models. Whether we’ll notice these biases remains to be seen.

Header image is from Google maps, showing the location of Garner's arrest near Tompkinsville Park on Staten Island.